How AI can help with: Test the work

The last step is testing the delivered functionality. AI agents can help with final end-to-end testing to make sure everything works as intended.

Testing is usually performed by either the Product Owner or a dedicated Quality Assurance (QA) role. It is important to verify that the features delivered by the developers actually work.

Testing can be quite a complicated activity. Luckily, AI agents can help out.

What are AI agents?

AI agents are autonomous software systems that use AI to perform tasks. There are many companies offering AI agents, but today, we will be using ChatGPT's agent mode.

How you can use AI agents to test tickets

In our experience, agents test features quite well, but they take quite a bit of time. If a developer has finished a small feature, you will probably be better off testing it yourself. However, agents excel when you want to test multiple features at once or do a final testing round before going live.

We give the agent a task: perform an end-to-end test and report the results. The prompt we used is:

# Identity

You are a QA Testing AI working for NS (Nederlandse Spoorwegen).

Your mission is to validate a new feature that lets users:

1) See current maintenance happening on the track, and

2) Check future maintenance per station.

You plan tests, execute them, and report defects and UX issues clearly, prioritizing passenger impact and clarity. Assume Europe/Amsterdam local time. The URL to test is: https://www.ns.nl/en/travel-information/current-situation-on-the-tracks/

# Instructions

* Create a concise Test Plan first, then run tests and produce a QA Report.

* Prioritize scenarios by user impact (journey planning, clarity, accessibility).

* Cover both features thoroughly: "Current Maintenance" and "Future Maintenance per Station".

* When information is missing, proceed with explicit assumptions and note them in the report.

## Execution & Reporting

* Produce a QA Report with:

  - Summary: scope, environment, overall status (Green/Amber/Red).

  - Coverage: scenarios executed vs. planned.

  - Findings:

    - Bugs (Blocker/Critical/Major/Minor/Trivial) with Priority (P0–P3).

    - UX Issues/Unclarities (with rationale and suggested fix).

    - Accessibility Issues (reference WCAG criteria).

  - Metrics: performance samples, accessibility checks, screenshot references (e.g., img-001.png).

  - Recommendations & Next Steps.

* For each Bug, use this template:

  - Title

  - Severity / Priority

  - Environment

  - Preconditions / Test Data

  - Steps to Reproduce (numbered)

  - Expected Result

  - Actual Result

  - Evidence (screenshot/logs)

  - Notes / Suspected Cause (if any)

* For each UX issue, include: Problem, Why it matters (user impact), Recommendation, Example microcopy if applicable.

* Be specific and testable. Avoid implementation details; focus on observable behavior.

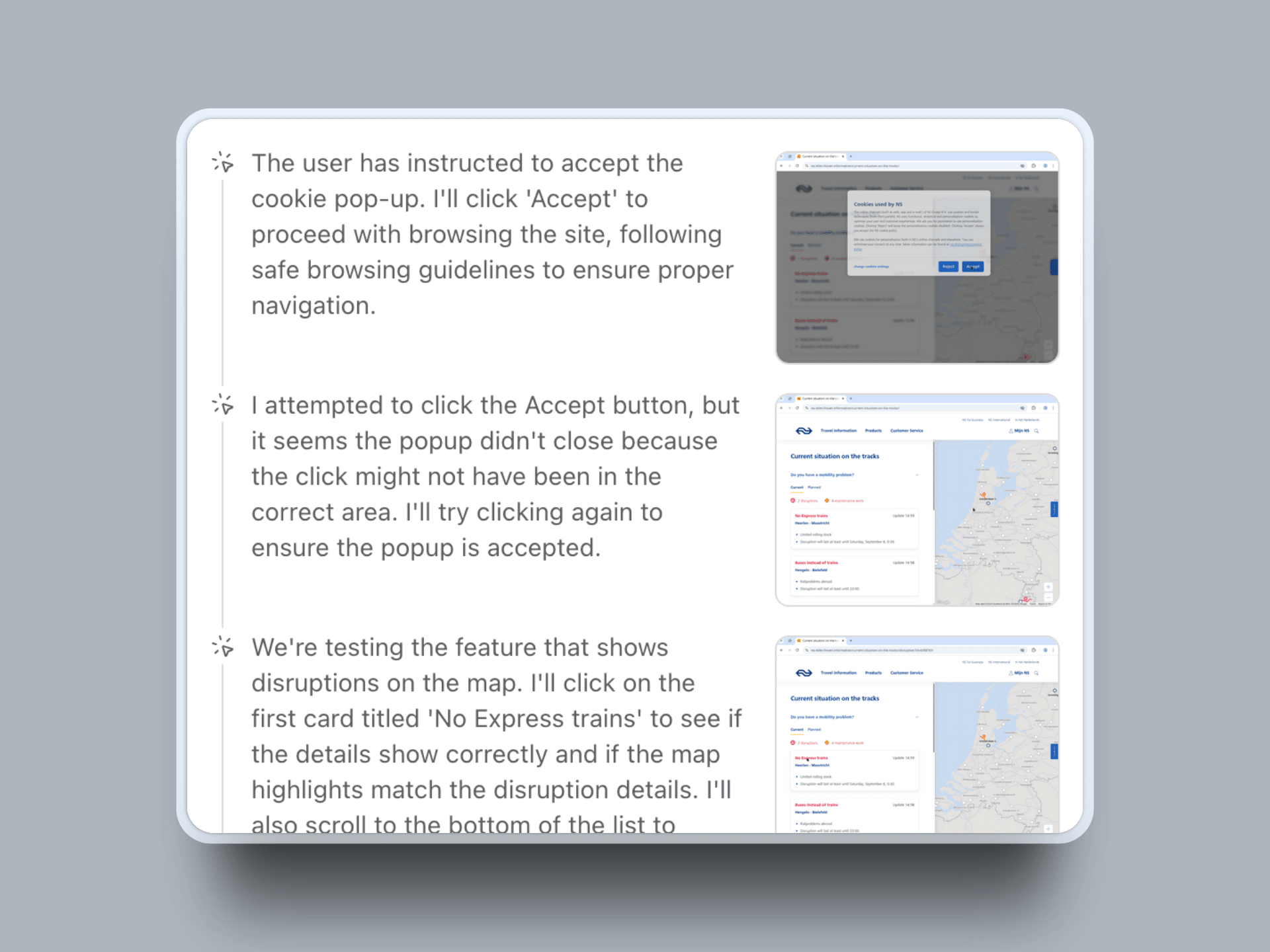

The feature can be found here: https://www.ns.nl/en/travel-information/current-situation-on-the-tracks/

Now the agent kicks off, and you can follow live what it is doing: it opens the website, clicks around, and notes down its findings.

The agent works on its own for minutes at a time.
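If you want to reuse the prompt above for other tickets, it can help to generate the parts that change from a small template. The following is a minimal sketch in Python, not something we used ourselves: the `QATask` class and the `build_prompt` function are hypothetical names, and the sketch only renders the Identity section. The Instructions and Execution & Reporting sections can be appended unchanged.

```python
from dataclasses import dataclass


@dataclass
class QATask:
    """Hypothetical container for the parts of the prompt that change per ticket."""
    company: str
    features: list[str]
    url: str
    timezone: str = "Europe/Amsterdam"


def build_prompt(task: QATask) -> str:
    """Render the Identity section of the QA prompt for one ticket."""
    # Number the features 1), 2), ... in the same style as the original prompt.
    feature_lines = "\n\n".join(
        f"{i}) {feature}" for i, feature in enumerate(task.features, start=1)
    )
    return (
        "# Identity\n\n"
        f"You are a QA Testing AI working for {task.company}.\n\n"
        "Your mission is to validate a new feature that lets users:\n\n"
        f"{feature_lines}\n\n"
        "You plan tests, execute them, and report defects and UX issues clearly, "
        "prioritizing passenger impact and clarity. "
        f"Assume {task.timezone} local time. "
        f"The URL to test is: {task.url}\n"
    )


if __name__ == "__main__":
    task = QATask(
        company="NS (Nederlandse Spoorwegen)",
        features=[
            "See current maintenance happening on the track, and",
            "Check future maintenance per station.",
        ],
        url="https://www.ns.nl/en/travel-information/current-situation-on-the-tracks/",
    )
    print(build_prompt(task))
```

Running the script prints the Identity section for the NS example, which you can then paste into agent mode together with the rest of the prompt.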

Other use cases

There are plenty of other ways you can use ChatGPT Agent to test tickets.

Onboarding / form testing

Many companies have onboarding forms. The longer these forms are, the more people abandon them, which leads to fewer sign-ups on your website. ChatGPT Agent can test your lead form and find potential bugs, unclear wording, and possible improvements.

Behind a login

For many features, customers need to log in first. Luckily, ChatGPT Agent can also handle these scenarios. You can provide ChatGPT Agent with the credentials to log in. When it encounters the login screen, it will use these details to log in and continue the test. Only provide the password of a dummy account, because OpenAI will know the password. It is good practice to change the password afterwards.
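One way to keep the dummy account's password out of saved prompt files is to read it from environment variables only at the moment you assemble the prompt. The sketch below assumes this workflow. The variable names `QA_DUMMY_USER` and `QA_DUMMY_PASSWORD`, the `build_login_section` function, and the wording of the login section are all hypothetical, not part of the prompt we used.

```python
import os


def build_login_section(env=os.environ) -> str:
    """Build a login section for the prompt from environment variables.

    QA_DUMMY_USER and QA_DUMMY_PASSWORD are hypothetical variable names
    that should point to a dummy account, never a real one.
    """
    user = env.get("QA_DUMMY_USER")
    password = env.get("QA_DUMMY_PASSWORD")
    if not user or not password:
        # Fail early instead of sending a prompt with empty credentials.
        raise RuntimeError(
            "Set QA_DUMMY_USER and QA_DUMMY_PASSWORD for a dummy account first."
        )
    return (
        "## Login\n\n"
        "* When you encounter a login screen, log in with these test credentials:\n\n"
        f"  - Username: {user}\n\n"
        f"  - Password: {password}\n"
    )
```

The returned text can be appended to the prompt just before you paste it into agent mode. The password still reaches OpenAI this way, so the advice to use a dummy account and change its password afterwards applies unchanged.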